Unity has launched a closed beta of its visionOS support, which developers can apply to join.

Unity's support for visionOS was first announced alongside Vision Pro in early June. Acknowledging the existing Unity AR/VR development community, Apple said "we know there is a community of developers who have been building incredible 3D apps for years" and announced a "deep partnership" with Unity.

Porting Unity VR or AR experiences to run in a 'Full Space' on visionOS, meaning they don't support multitasking, is relatively straightforward. You use a similar build chain to iOS, where Unity interfaces with Apple's Xcode IDE. Rec Room, for example, is confirmed as coming to Vision Pro with minimal changes.

But building AR apps in Unity which can run in the visionOS 'Shared Space' alongside other apps is very different, and introduces several important restrictions developers need to be aware of.

Content in the Shared Space is rendered using Apple's RealityKit framework, not Unity's own rendering subsystem. To translate Unity mesh renderers, materials, shaders, and particles to RealityKit, Unity developed a new system it calls PolySpatial.

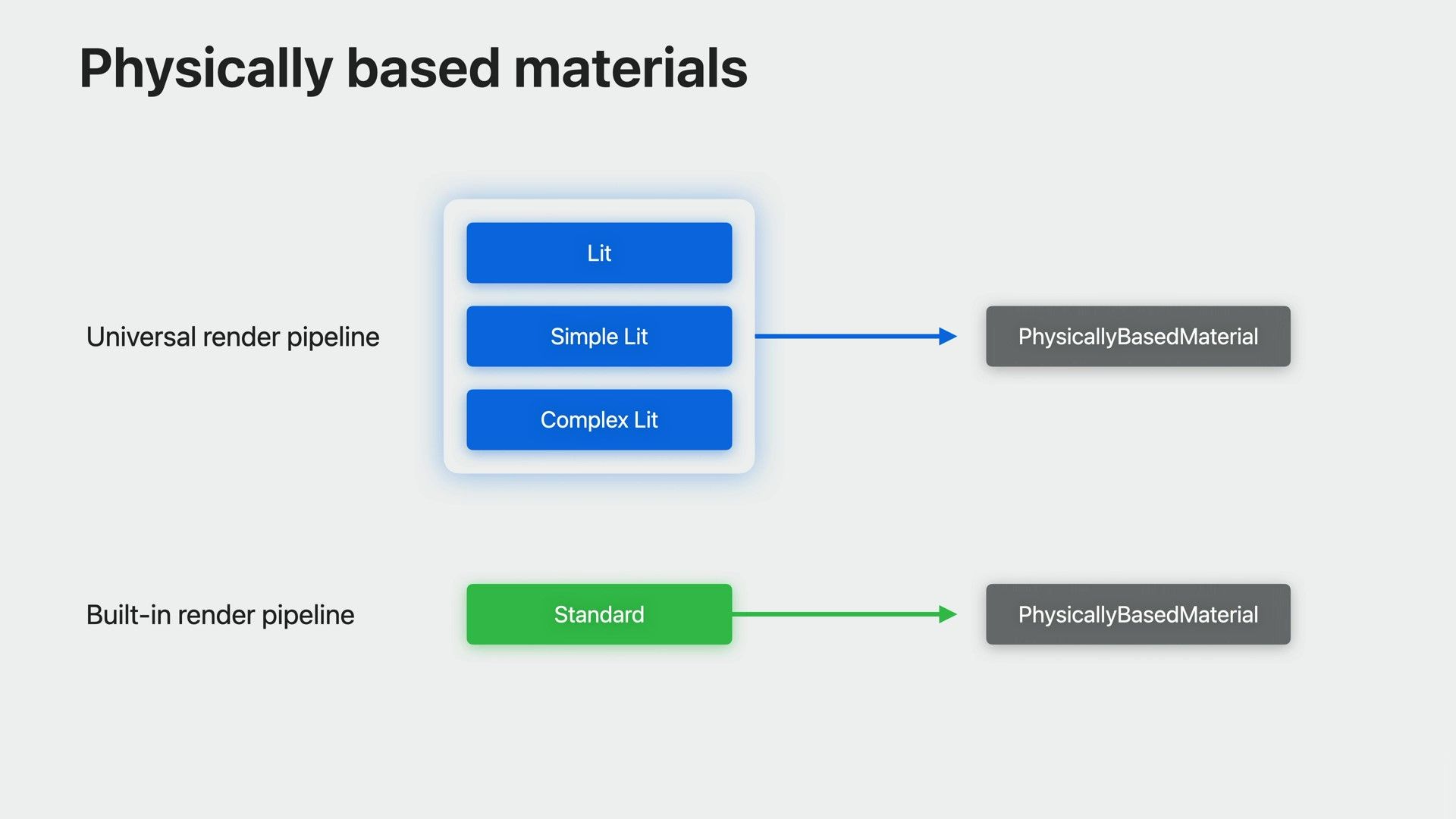

PolySpatial only supports certain materials and shaders though. Of the included materials, for the Universal Render Pipeline (URP) it supports the Lit, Simple Lit, and Complex Lit shaders, while for the Built-in Render Pipeline it only supports the Standard shaders. Custom shaders and material types are supported, but only through the Shader Graph visual tool, not handwritten shaders.
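
To make those rules concrete, here is a minimal Python sketch of a pre-port audit that flags materials PolySpatial would not translate. It is not Unity's API: the function names, the pipeline keys, and the material tuples are hypothetical, and the shader names are simply the labels used above rather than Unity's internal shader paths.

```python
# Supported shaders per render pipeline, as described in the article.
# These are the article's labels, not Unity's internal shader identifiers.
SUPPORTED_SHADERS = {
    "URP": {"Lit", "Simple Lit", "Complex Lit"},
    "Built-in": {"Standard"},
}


def is_supported(pipeline, shader, made_with_shader_graph=False):
    """Return True if, per the rules above, PolySpatial could translate the shader."""
    if made_with_shader_graph:
        # Custom shaders are supported only when authored in Shader Graph.
        return True
    return shader in SUPPORTED_SHADERS.get(pipeline, set())


def find_unsupported(pipeline, materials):
    """Return the names of materials whose shaders fail the check.

    `materials` is a list of (material_name, shader_name, made_with_shader_graph)
    tuples; this shape is an assumption made for the example.
    """
    return [
        name
        for name, shader, from_graph in materials
        if not is_supported(pipeline, shader, from_graph)
    ]


# Hypothetical project inventory used only for illustration.
project_materials = [
    ("Floor", "Lit", False),
    ("Water", "HandwrittenWater", False),
    ("Hologram", "HologramGraph", True),
]
flagged = find_unsupported("URP", project_materials)
```

In this example only the handwritten water shader would be flagged, since it is neither one of the listed URP shaders nor built in Shader Graph.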

PolySpatial does have a unique advantage though: you can enter play mode directly on the headset, rather than needing to rebuild after each change. Unity says this should significantly reduce iteration time.

Interested developers can apply to be included in the Unity visionOS support closed beta by filling in this form.

via Mint VR